Big Tech Is Scorching The Electric Grid

And consumers are getting stuck with the bill. These six charts illustrate how the AI frenzy is fueling the inflation that’s slamming the power sector.

In 1946, when ENIAC, the world’s first general-purpose computer, was first turned on, it used so much power (about 174 kilowatts) that it caused the lights in Philadelphia to dim momentarily.

Six years later, John von Neumann, the mathematician and computer pioneer, unveiled MANIAC, short for Mathematical Analyzer Numerical Integrator and Automatic Computer, the first computer to use RAM (random access memory). MANIAC was far more efficient than ENIAC, drawing about 19.5 kilowatts, or roughly one-ninth the power needed by its predecessor. Author George Dyson has written that “the entire digital universe can be traced directly” back to MANIAC.

As I explained in May 2024, the energy efficiency of our computers has continually improved since the days of ENIAC and MANIAC. And while the efficiency of our digital machinery has increased:

The power-hungry nature of computing has not. The Computer Age has been defined by the quest for ever-more computing power and ever-increasing amounts of electricity to fuel our insatiable desire for more digital horsepower. As data centers have grown over the past two decades, concerns about power availability have surged.

Today, the US is facing an unprecedented power crunch. After two decades of flat electricity demand, power use is suddenly soaring as the world’s biggest tech companies race to build massive data centers running thousands of AI computers that will use stunning volumes of juice. On August 11, the Electric Power Research Institute estimated that AI’s existing power demand is approximately 5 gigawatts, but that demand could reach 50 GW by 2030. For perspective, 50 GW is larger than the total electric generation capacity in Pennsylvania, which has a population of 13 million people and is the fifth most-populous state in America. (Pennsylvania currently has about 49 GW of electric generation capacity.)
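For readers who want to check the math, here is a rough back-of-the-envelope sketch of those figures in Python. The 5 GW, 50 GW, and 49 GW numbers come from the paragraph above. The five-year growth window is an assumption (it treats the 5 GW estimate as a 2025 figure, which the EPRI estimate as quoted here does not specify), and the variable names are purely illustrative.

```python
# Figures quoted in the article (EPRI estimate and Pennsylvania capacity).
current_ai_demand_gw = 5.0
projected_ai_demand_gw = 50.0
pennsylvania_capacity_gw = 49.0

# Assumption (not stated in the article): the 5 GW estimate describes 2025,
# leaving five years of growth to reach the 2030 projection.
years_of_growth = 5

# How many times larger the 2030 projection is than today's demand.
growth_multiple = projected_ai_demand_gw / current_ai_demand_gw

# Compound annual growth rate implied by that multiple over the assumed window.
implied_annual_growth = growth_multiple ** (1 / years_of_growth) - 1

# Projected AI demand relative to Pennsylvania's total generation capacity.
share_of_pa_capacity = projected_ai_demand_gw / pennsylvania_capacity_gw

print(f"Growth multiple: {growth_multiple:.0f}x")
print(f"Implied annual growth: {implied_annual_growth:.1%}")
print(f"Share of Pennsylvania capacity: {share_of_pa_capacity:.0%}")
```

Under that assumed window, a tenfold increase works out to compound growth of a little under 60% per year. A shorter or longer window would change the annual rate but not the tenfold multiple.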

On August 27, Monitoring Analytics, the independent market monitor for PJM, the largest regional transmission organization in the US, warned that the costs associated with meeting the surging demand for AI could result in a “massive wealth transfer” from ratepayers to Big Tech. The way to avoid that, it said, was for data center operators to “bring their own new generation.” In other words, PJM should require Big Tech to build its own power plants rather than simply connecting to the existing grid. PJM has previously projected that data centers will push peak loads on its system up by 30 GW by 2030.

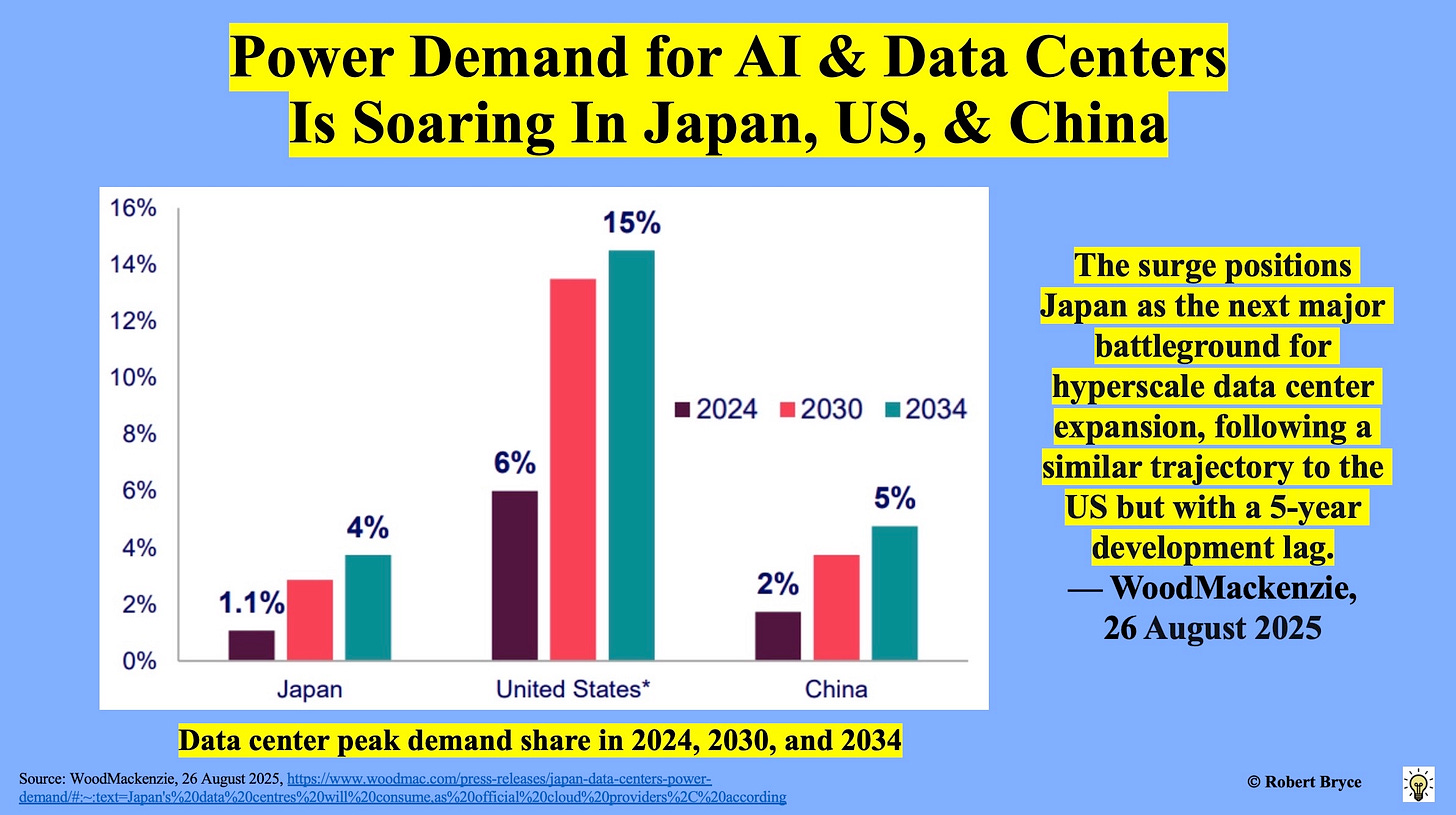

On Wednesday, Wood Mackenzie, the global energy consulting firm, reported that data centers used about 6% of all US electricity in 2024, and that share could increase to 13% by 2030. As seen above, it’s not just the US. The consultancy said that data center demand is surging in Japan and China, and that Japan will be the “next major battleground for hyperscale data center expansion.”

The global surge in power demand for AI has led to soaring prices for generators, transformers, and other equipment needed to generate and distribute electricity. In short, Big Tech is scorching the grid. The rush to build dozens of gigawatts of generation capacity to fuel the insatiable power needs of AI has ignited a surge of inflation that is tearing through electric sector supply chains, generation plants, and distribution systems.

The proof is in the price increases.

Let’s take a look.
